By Jeffrey Carl

Boardwatch Magazine was the place to go for Internet Service Provider industry news, opinions and gossip for much of the 1990s. It was founded by the iconoclastic and opinionated Jack Rickard in the commercial Internet’s early days, and by the time I joined it had a niche but influential following among ISPs, particularly for its annual ranking of Tier 1 ISPs and through the ISPcon tradeshow. Writing and speaking for Boardwatch was one of my fondest memories of the first dot-com age.

In a Nutshell: The original hype over open-source software has died down – and with it, many of the companies built around it. Open-source software projects like Linux, *BSD, Apache and others need to face up to what they’re good at (power, security, speed of development) and what they aren’t (ease of use, corporate-friendliness, control of standards). They will either have to address those issues or remain niche players forever while Microsoft goes on to take over the world.

The Problem

There is a gnawing demon at the center of the computing world, and its name is Microsoft.

For all the Microsoft-bashing that will go on in the rest of this column, let me state this up front: Microsoft has done an incredible job at what any company wants to do – leverage its strengths (sometimes in violation of anti-trust laws) to support its weaknesses and keep pushing until it wins the game. That’s the reason I hold Microsoft stock – I can’t stand the company, but I know a ruthless winner when I see it. I hope against reason that my investment will fail.

It has been nearly two years since I wrote a column that wasn’t “about” something, that was just a commentary on the state of things. Many of you may disagree with this, and assign it a mental Slashdot rating of “-1: Flamebait.” Nonetheless, I feel very strongly about this, and I think it needs to be said.

Here’s the bottom line: no matter how good the software you create is, it won’t succeed unless enough people choose to use it. Without them, given enough time and the accelerating advances of other software, I guarantee you it will fail. You may not think this could ever be true of Linux, Apache, or any other open-source software that is best-of-breed. But ask any die-hard user of AmigaOS, OS/2 or WordPerfect, and they’ll tell you that you’re just wishing.

Sure, there are plenty of reasons these comparisons are dissimilar; Amiga was tied to proprietary hardware, and OS/2 and WordPerfect are the properties of companies which must produce profitable software or die. “Open-source projects are immune to these problems,” you say. Please read on, and I hope the similarities will become obvious.

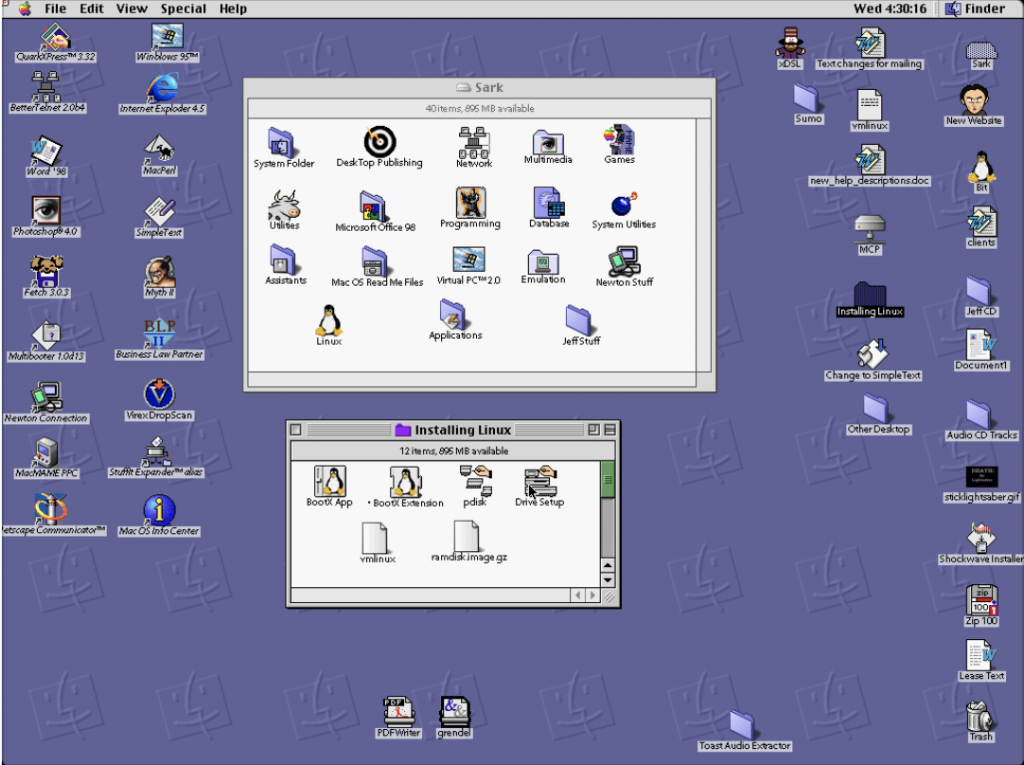

For the purposes of this column, I’m going to include Apple and MacOS among the throng (even though Apple’s commitment to open source has frequently been lip service at best), because they’re the best example of software that went up against Microsoft and failed.

You say it’s the best software out there for you – so why should you care what anyone else thinks? You don’t, at first. But, slowly, the rest of the world makes life harder for you until you don’t have much choice.

In the Software Ghetto

Look at Apple, for example (disclaimer: I’m a longtime supporter of MacOS, along with FreeBSD and Linux). My company’s office computers are all Windows PCs; my corporate CIO insisted, despite my arguments for “the right tool for the job,” that I couldn’t get Macs for my graphics department. “We’ve standardized on a single platform,” is what he said. He’s not evil or dumb; it’s just that Windows networks are all he knows and is comfortable with.

Big deal, right? Most Mac users are fanatics. There’s a registered multi-million-person-plus community out there of fellow Mac-heads that I can always count on to keep buying Macs and keep the platform alive forever, right? An installed base of more than 20 million desktops plus sales of 1.5 million new computers in the past year alone is enough for perpetual life, right?

Sure, until that number is fewer than the number of licenses that Microsoft decides that it needs to keep producing MS Office for Mac. Right now, my Mac coexists with the Windows-only network at my office because I can seamlessly exchange files with my Windows/Office brethren. But as soon as platform-neutral file-sharing goes out the Window (pun intended), which Microsoft could easily arrange by upgrading Windows with a proprietary document format that can’t be decoded by other programs without violating the DMCA or something asinine like that … I’m going to have to get a Windows workstation.

Or Intuit decides there just aren’t enough users to justify a Mac version of Quicken. Or, several years from now, Adobe decides it’s just not profitable to make a Mac version of Photoshop, InDesign or Illustrator … or even makes critical new releases six months or a year behind the Windows version. I can still keep buying Macs … but I’ll need a Windoze box to run my critical applications. As more people do this, Apple won’t have the revenues to fund development of hardware and software worth keeping my loyalty. And I’ll keep using the Windows box more and more until I finally decide I can’t justify the expense of paying for a computer I love that can’t do what I need.

“Apple,” you say, “is a for-profit company tied to a proprietary hardware architecture! This could never happen to open-source software running on inexpensive, common hardware!”

Open Source with a Closed Door

Let’s step back and look at Linux. A friend of mine works as a webmaster at a company that recently made a decision about what software to use to track website usage statistics. His boss found a product which provided live, real-time statistics – but which only ran on Windows with Microsoft IIS. My friend showed off the virtues of Analog as a web stats tool, but it was too complicated for his boss to decipher. Whatever arguments my friend provided (“Stability! Security! The Virtues of Open Development!”) were simply too intangible to outweigh the benefits his boss wanted, which this one Windows/IIS-only software package provided. So, they switched to Windows as the hosting environment.

There may come a day when you suggest an open-source software solution (let’s say Apache/Perl/PHP) to your boss or bosses, and they ask you who will run it if you’re gone. “There are plenty of people who know these things,” you say, and your boss says, “Who? I know plenty of MCSEs we can hire to run standardized systems. How do we know we can hire somebody who really knows about ‘Programming on Pearls’ or running our website on ‘PCP’ or whatever you’re talking about? There can’t be that many of them, so they must be more expensive to hire.” Protest as you might, there isn’t a single third-party statistic or study you can cite to prove them wrong.

If you ask the average corporate IT manager about open source, they’ll point to the previous or imminent failures of most Linux-based public companies as “proof” that open-source vendors won’t be there to provide paid phone support in two years like Microsoft will.

I’m willing to bet that most of you out there can cite examples of the dictum that corporate IT managers don’t ever care about the costs they will save by using Linux. They are held responsible to a group of executives and users that aren’t computer experts, aren’t interested in becoming computer experts, and wouldn’t know the virtues of open source if it walked up and bit them on the ass. They want it to be easy for these people, and fully and seamlessly compatible with what the rest of the world is using, cost be damned. Say what you will – right now, there’s just no logical reason for these people not to choose Windows.

So maybe Linux users drop down to the point where Mac users have (still a significant number) – only the die-hard supporters. But how many of you Linux gurus out there don’t have a separate Windows box or boot partition to play all the games you like that aren’t developed for Linux because of lack of users/market share? Well, what about the next killer app that’s Windows-only, and the one after that, until you use Linux less and less? Or the next cool web hosting feature that only MS/IIS has? Or as more MS Internet Explorer-optimized websites appear?

I’m not arguing that Linux or BSD would ever truly disappear (there are still plenty of OS/2 users out there). I am, however, saying that as market share erodes, so does development; and, over the long run – if things continue on the present course – Windows has already won.

The main point is this: niche software will eventually die. It may take a very long time, but it eventually will die. Mac or Linux supporters claim that market share isn’t important: look at BMW, or Porsche, which have tiny market shares but still thrive. The counterpoint is that if they could only drive on compatible roads, and the local Department of Transportation had to choose between building roads that 95% of cars could ride on or building separate roads for these cars, they would soon have nowhere to drive. True, Linux/BSD has several Windows ABI/API compatibility projects and Macs have the excellent Connectix VirtualPC product for running Windows on Mac, but very few corporate IT managers or novice computer users are going to choose those over “the real thing.” And I’m willing to bet that those two groups make up 90% of the total computer market.

You can argue all you like that small market share doesn’t mean immediate death. You’re right. But it means you’re moribund. One of the last bastions of DOS development, the MAME arcade game emulator, is switching after all these years to Win32 as its base platform – because the lead developer simply couldn’t use DOS as a true operating platform anymore. It will take time, but it will happen. Think of all the hundreds of thousands (if not millions) of machines out there right now running Windows 3.11 for Workgroups, OS/2, VMS, WordPerfect 5.1, FrameMaker, or even Lotus 1-2-3. They do what they do just fine. But, eventually, they’ll be replaced. With something else.

The Solution

All this complaining aside, the situation certainly isn’t hopeless. The problems are well known; it’s easier to point out problems than solutions. So, what’s the answer?

For that 90% of users that will decide marketshare and acceptance, two things matter: visible advantages in ease of use, or quantifiable bottom-line cost savings. Note for example how Mac marketshare declined from 25% to less than 10% when the “visible” ease-of-use differential between Mac System 7 and Windows 95 declined. Or look at how the cost of more-expensive Mac computers and fewer support personnel compares with that of cheaper Windows PCs and more (but certified, with estimable salary costs) support personnel.

Open-source software development is driven by programmers. Bless their hearts, they create great software but they’re leading it to its eventual doom. They need to ally firmly with their most antithetical group: users. Every open-source group needs to recruit (or, conversely, needs to be joined by) at least one user-interface or marketing person. Every open-source project that doesn’t have at least one person asking the developers at all steps “Why can’t my grandmother figure this out?” is heading for disaster. Those that do are making progress.

Similarly, those open-source software projects that have proprietary competitors and are dealing with some sort of industry standard, but aren’t taking a Microsoft-esque “embrace, extend” approach, are going to fall behind. If they don’t provide new APIs or hooks for new features (and there’s nothing against making these open and well-documented), Microsoft will when they release a competing product (and, believe me, they will; wait until Adobe has three consecutive bad quarters and Microsoft buys them). The upshot of this point is that open-source projects can’t just conform to standards that others with greater marketshare will extend; they need to provide unique, fiscally-realizable features of their own.

Although Red Hat has made steps in this direction, other software projects (including Apple, Apache, GNOME, KDE and others) should work much harder to offer some rudimentary certification process that provides a standardized qualification. Otherwise, corporate/education/etc. users will have no idea what it costs to hire qualified support personnel.

Lastly, those few corporate entities staking their claims on open source should be sponsoring plenty of studies to show the quantifiable benefits of using their products (including the costs of support personnel, etc.). The concepts of “ease of use” or “open software” don’t mean jack to anyone who isn’t a computer partisan; those who consider computers to merely be tools must be shown why something is better than the “safe” choice.